A/B Testing Mistakes You’re Making: How to Avoid Them

A/B testing is a powerful tool for optimization, but it can be tricky. Mistakes made during a test can lead to inaccurate data, wasted money and wrong conclusions.

To avoid these mistakes, it’s important to understand the basics of A/B testing and to design your tests carefully. Here are the four most common mistakes to avoid when A/B testing:

Mistake #01: Not Having a Control Group

When you’re running an A/B test, it’s important to have a control group. This is the group of users who continue to see the existing version while the other group sees the change, and who serve as the baseline for comparison. Without a control group, you won’t be able to tell whether any differences you see are due to the change you made or to something else, such as seasonality, a marketing campaign or random variation.

However, there are some situations where a control group isn’t practical. For example, if you’re testing a new feature that’s only available to a limited number of users, you might not have enough people to form one. In this case, you’ll need to rely on other data sources, such as historical analytics data, to judge whether the change had an effect. Treat those conclusions with more caution, because a before-and-after comparison can’t rule out outside factors.
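As a rough illustration of how users might be split into a control group and a variant group, here is a minimal sketch. The function name `assign_group`, the experiment name and the 50/50 split are assumptions made for this example, not part of any particular testing tool. Hashing the user ID means the same user always lands in the same group.

```python
import hashlib


def assign_group(user_id: str, experiment: str = "landing-page-test") -> str:
    """Deterministically assign a user to 'control' or 'variant' (50/50 split).

    The experiment name is mixed into the hash so that different tests
    split the same users differently. Both names here are hypothetical.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # a number from 0 to 99
    return "control" if bucket < 50 else "variant"


# Count how 10,000 made-up user IDs are distributed between the groups.
counts = {"control": 0, "variant": 0}
for i in range(10_000):
    counts[assign_group(f"user-{i}")] += 1

print(counts)
```

The two counts should come out close to an even split. The control group keeps seeing the existing page, so any difference in conversion rate can be compared against it.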

Mistake #02: Testing Too Many Variables

When you’re running an A/B test, it’s important to keep the number of variables you’re testing to a minimum. If you change several things at once, such as the headline, the button and the layout, you won’t be able to determine which of them had an impact on the results. By limiting each test to one change, or a small number of closely related changes, you can attribute the result to a specific cause and make better decisions about your design.
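To see why extra variables are costly, consider what happens if you try to test every combination so that each variable’s effect can be separated out. The sketch below uses made-up page elements and a made-up traffic figure purely for illustration.

```python
from itertools import product

# Hypothetical page elements, each with two options.
elements = {
    "headline": ["current", "new"],
    "button_text": ["Buy now", "Get started"],
    "hero_image": ["photo", "illustration"],
}

# Every combination of options is a separate version of the page.
combinations = list(product(*elements.values()))

daily_visitors = 2000  # assumed traffic, for illustration only
visitors_per_version = daily_visitors // len(combinations)

print(f"{len(combinations)} versions, about {visitors_per_version} visitors each per day")
```

Each extra two-option variable doubles the number of versions, so the traffic available to each one halves and the test takes correspondingly longer to produce a reliable result.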

Mistake #03: Not Waiting for Statistical Significance

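Many businesses rely on A/B testing to make decisions about their products and websites. However, it’s important to remember that A/B tests can only provide reliable results if they are allowed to run long enough to collect a sufficient sample and reach statistical significance. A result is usually called statistically significant at the 95% confidence level. This means that if the change really had no effect, a difference at least as large as the one you observed would show up less than 5% of the time through random variation alone. It does not mean there is a 95% chance that the change caused the difference.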

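While it can be tempting to make decisions based on early results, early differences are often just noise, and stopping a test the first time it looks like a win increases the chance of a false positive. Therefore, it’s important to decide on a sample size or test duration in advance, and to wait until the test has both run its course and reached statistical significance before drawing any conclusions.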
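One common way to check significance when comparing two conversion rates is a two-proportion z-test. The sketch below uses only Python’s standard library. The function name and the visitor numbers are invented for this example, and your testing tool may use a different method.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both variants convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("Cannot test: no variation in the data")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Same 5% vs 10% conversion rates, but very different sample sizes.
z_small, p_small = two_proportion_z_test(5, 100, 10, 100)
z_large, p_large = two_proportion_z_test(50, 1000, 100, 1000)

print(f"100 visitors per variant:  p = {p_small:.3f}")
print(f"1000 visitors per variant: p = {p_large:.6f}")
```

With 100 visitors per variant the p-value is well above 0.05, so the difference could easily be chance. With 1,000 visitors per variant the same conversion rates give a p-value far below 0.05. This is why an early lead on a small sample should not be treated as a result.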

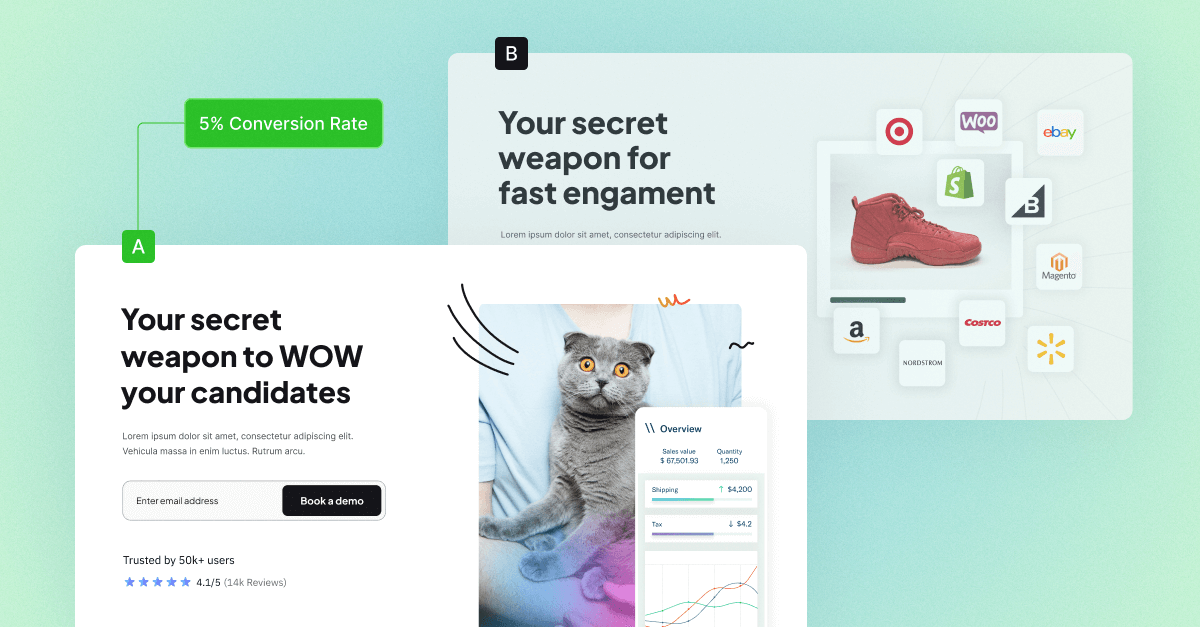

Mistake #04: Relying on Averages Alone

Averages can be misleading, and this is especially true when it comes to A/B testing. When you’re looking at the results of an A/B test, it’s important to look at the individual results for each variant, not just the overall average.

The average can hide important differences between the variants. For example, let’s say you’re testing two variants of a landing page, with traffic split evenly between them. Variant A has a conversion rate of 5%, and variant B has a conversion rate of 10%. The average conversion rate is 7.5%, but that doesn’t tell the whole story: on its own, it says nothing about which variant is performing better. Looking at each variant separately shows that variant B converts at twice the rate of variant A.
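The arithmetic behind this example can be sketched in a few lines. The visitor and conversion counts below are made up to match the 5% and 10% rates in the example, and the helper name `pooled_rate` is an assumption for illustration.

```python
def pooled_rate(variants: dict) -> float:
    """Overall conversion rate across all variants combined."""
    total_conversions = sum(v["conversions"] for v in variants.values())
    total_visitors = sum(v["visitors"] for v in variants.values())
    return total_conversions / total_visitors


# Even traffic split: 5% for A, 10% for B.
even_split = {
    "A": {"visitors": 1000, "conversions": 50},
    "B": {"visitors": 1000, "conversions": 100},
}

# Same rates, but B only received a fifth of A's traffic.
uneven_split = {
    "A": {"visitors": 1000, "conversions": 50},
    "B": {"visitors": 200, "conversions": 20},
}

rates = {name: v["conversions"] / v["visitors"] for name, v in even_split.items()}
relative_lift = (rates["B"] - rates["A"]) / rates["A"]

print(f"Per-variant rates: {rates}")
print(f"Relative lift of B over A: {relative_lift:.0%}")
print(f"Overall rate, even split:   {pooled_rate(even_split):.2%}")
print(f"Overall rate, uneven split: {pooled_rate(uneven_split):.2%}")
```

The per-variant rates stay at 5% and 10% in both cases, but the overall average shifts with the traffic split. The per-variant figures and the relative lift are the numbers to base a decision on.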

A/B testing is a powerful way to increase your website’s performance, but only if you’re doing it correctly. Make sure you avoid these four common mistakes so that you can get the most out of your tests and improve your business results.

If you’re stuck and not sure how to get started with A/B testing, or need a hand getting your A/B testing programme back on track, let us know. We’d love to help!